Monitoring the performance of your web application is key to keeping a good user experience, even as more features are added over time. Plenty of case studies show that performance is directly related to happy, returning customers. Zalando, for instance, increased its revenue by 0.7% by improving its load time by only 100ms.

There is a huge number of metrics that can give you a good understanding of your web app's performance. Metrics like Time to First Byte or Time to Interactive do a good job of describing the user experience.

Custom metrics take this one step further by measuring more precisely what the user is experiencing. Twitter, for example, implemented a metric called Time to First Tweet. Metrics like this are also easier for non-technical people to understand, and they should focus on what brings business value. An online shop, for example, could implement a custom metric that measures the Time to First Article.

Nevertheless, custom metrics should not replace an existing performance monitoring system; they complement it.

In this article, I will show you how to integrate custom metrics into your CI pipeline using TravisCI and WebPageTest.

In another article, I explained some JavaScript APIs that you can use during development to measure the performance of JavaScript functions.

For the examples in this tutorial, I will use a personal project of mine: a Pomodoro timer app for studying and working, built with React.

Tutorial

Taking measurements in the source code

We start by adding a performance.mark call to the part of our source code that we want to measure.

You could place it in your application right after you have fetched and rendered user-relevant data from an API. For this tutorial, I measured the time it takes for the timer screen of my Pomodoro Timer to mount.

Remember the string you pass as an argument to the performance.mark function. You will need it when accessing the data later on.

componentDidMount() {
  // You might fetch some data here; if so, set the mark once it has rendered

  performance.mark('timer-screen');
}

That's all you need to change in your JavaScript source code. Now we continue with setting up WebPageTest.
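To check what a mark actually records, you can read it back by the name you gave it with performance.getEntriesByName. The sketch below uses Node's perf_hooks module (assuming Node 16 or later), which implements the same User Timing API as browsers, so it runs outside of React. In the browser, the same getEntriesByName call should work in the DevTools console once the timer screen has mounted.

```javascript
const { performance } = require('node:perf_hooks');

// Record a mark, just like componentDidMount() does in the browser
performance.mark('timer-screen');

// Read it back using the same name that was passed to performance.mark
const [mark] = performance.getEntriesByName('timer-screen', 'mark');

// startTime is in milliseconds relative to performance.timeOrigin
// (in the browser, that is the start of the navigation)
console.log(`${mark.name}: ${mark.startTime.toFixed(1)}ms`);
```
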

WebPageTest

WebPageTest is an open source project that helps you measure the performance of your web application. As stated on their website:

WebPageTest is an open source project that is primarily being developed and supported by Google as part of our efforts to make the web faster.

Isn't WebPageTest only a website?

When you search for WebPageTest, the first result is probably the online interface at webpagetest.org. It already gives you thorough insights into your website's performance.

But there's more to it. All the features the UI provides (and more) are accessible through a REST API. Using this API gives you a very detailed analysis of your website's performance. In fact, it has so many features that there is an entire book about this topic.

In this article, I'll only scratch the surface of what is possible.

Setup

Get an API key

First of all, you need an API key for your project, which you can request on the WebPageTest website. The API is entirely free and allows up to 200 tests per day.

Install the WebPageTest CLI (optional)

If you want to try out the API key, the easiest way to do so is with the CLI tool. Install it first and then run a test with your API key:

npm install webpagetest -g
webpagetest test https://pomodoro-timer.app --key [your-api-key]

You should get a JSON response containing "statusCode": 200.

Query the WebPageTest API with a script

Now we need to set up a script that requests an analysis of our website using the WebPageTest API.

We will be using Node.js and a WebPageTest API wrapper.

A new file called webpagetest.js will contain the script, which can be executed by running node webpagetest.js.

We need to install webpagetest as a project dependency by running yarn add webpagetest.

You can get a full example here.

const WebPageTest = require('webpagetest');

// Instantiate WebPageTest with your own API key
const wpt = new WebPageTest(
  'https://www.webpagetest.org/',
  'your-api-key',
);

wpt.runTest('https://pomodoro-timer.app', {
  runs: 3,
  location: 'ec2-eu-central-1',
  firstViewOnly: true,
}, (err, result) => {
  console.log(err || result);
});

Note that the location is set to a European server. You might want to adjust this depending on where your server is located.

Run this command to get a list of all available locations:

webpagetest locations [your-api-key]

The callback of the runTest function receives the same object that running webpagetest test in the terminal printed.

We are especially interested in the testId since we'll use this for checking if the test finished.

Wrap the functions in promises

I chose to wrap the WebPageTest functions in Promises so I don't get into too many levels of nesting (aka callback hell).

The runTest function for example then looks like this:

const runTest = () => {
  return new Promise((resolve, reject) => {
    wpt.runTest('https://pomodoro-timer.app', {
      runs: 3,
      location: 'ec2-eu-central-1',
      firstViewOnly: true,
    }, (err, result) => {
      if (result && result.data) {
        return resolve(result.data);
      }
      console.error(err);
      // Always reject with an Error, even if the API returned neither data nor an error
      return reject(err || new Error('WebPageTest returned no data'));
    });
  });
};

And it can easily be called from an asynchronous main function like this:

const main = async () => {
  try {
    const result = await runTest();
    // do something with the result
  } catch (e) {
    console.error(e);
  }
};

main();

For the sake of simplicity, I'll leave out the Promise wrappers and the main function in the following code samples. The entire script can be found in this GitHub gist.
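As an alternative to writing each wrapper by hand, Node's built-in util.promisify converts any function that takes a Node-style (err, result) callback as its last argument into one that returns a Promise. The sketch below uses a hypothetical stand-in, fakeRunTest, so it runs without an API key. With the real client you would presumably pass a bound method, e.g. promisify(wpt.runTest.bind(wpt)); that is an assumption about the wrapper's internals, not something its documentation promises.

```javascript
const { promisify } = require('node:util');

// Hypothetical stand-in for wpt.runTest: takes (url, options, callback)
// and calls back asynchronously with (err, result)
function fakeRunTest(url, options, callback) {
  setTimeout(() => {
    if (!url) {
      callback(new Error('No URL given'));
      return;
    }
    callback(null, { statusCode: 200, data: { testId: 'fake-test-id', url, runs: options.runs } });
  }, 10);
}

// promisify appends its own callback as the last argument
const runTestAsync = promisify(fakeRunTest);

const startTest = async () => {
  // Unlike the hand-written wrapper, promisify resolves with the whole
  // result object, so pick out `data` yourself
  const { data } = await runTestAsync('https://pomodoro-timer.app', {
    runs: 3,
    location: 'ec2-eu-central-1',
    firstViewOnly: true,
  });
  console.log(`Started test ${data.testId}`);
  return data;
};

startTest().catch((err) => console.error(err));
```

The trade-off is small: promisify saves boilerplate, while the hand-written version lets you unwrap result.data and shape the error in one place.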

Wait for the test to finish

The runTest function already provided us with a test ID (result.data.testId) that we can use to check whether the test we started earlier has finished.
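Conceptually, waiting for the test is plain polling: ask for the status, wait, and ask again until the test reaches a final state. Here is a minimal, library-independent sketch of that loop with an upper bound, so a stuck test cannot hang the CI job forever. The names pollUntilComplete and checkStatus are hypothetical, and the status handling assumes WebPageTest's convention that 200 means complete, 1xx codes mean the test is queued or running, and codes of 400 and above mean it failed. With the defaults it gives up after roughly five minutes (150 polls, 2 seconds apart).

```javascript
// Hypothetical helper (not part of the webpagetest package): polls
// `checkStatus` until it reports completion, with an upper bound on attempts
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function pollUntilComplete(checkStatus, { intervalMs = 2000, maxAttempts = 150 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const status = await checkStatus();
    if (status.statusCode === 200) {
      return status; // the test has finished
    }
    if (status.statusCode >= 400) {
      throw new Error(`Test failed: ${status.statusText}`);
    }
    console.log(status.statusText); // assumed 1xx: queued or still running
    if (attempt < maxAttempts) {
      await sleep(intervalMs);
    }
  }
  throw new Error(`Test did not finish after ${maxAttempts} attempts`);
}

// Usage with a fake status source that reports completion on the third poll
let polls = 0;
const fakeCheckStatus = async () => {
  polls += 1;
  return polls < 3
    ? { statusCode: 101, statusText: 'Waiting in queue' }
    : { statusCode: 200, statusText: 'Test Complete' };
};

pollUntilComplete(fakeCheckStatus, { intervalMs: 10 })
  .then((status) => console.log(status.statusText))
  .catch((err) => console.error(err.message));
```

To use it with the real client, checkStatus could wrap wpt.getTestStatus(testId, callback) in a Promise, the same way runTest is wrapped above.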

The WebPageTest API wrapper provides us with the getTestStatus function. We need to call this function until it tells us that the test has finished. To do so, we call it on an interval like this:

// Runs inside a Promise executor (omitted here), which provides resolve and reject
const intervalId = setInterval(() => {
  wpt.getTestStatus(testId, (err, result) => {
    if (err) {
      // clear the interval before rejecting the promise
      clearInterval(intervalId);
      return reject(err);
    }

    if (result && result.statusCode === 200) {
      // the test finished: clear the interval before resolving the promise
      clearInterval(intervalId);
      return resolve();
    }

    // the test didn't finish yet, print the status text
    if (result) {
      console.log(result.statusText);
    }
  });
}, 2_000);

Fetch the test result

When the above function resolves, we can fetch the final result with the testId we got when starting the test:

// Also runs inside a Promise executor that provides resolve and reject
wpt.getTestResults(testId, (err, result) => {
  if (err) {
    console.error(err);
    return reject(err);
  }

  console.log(`Time to timer screen: ${result.data.average.firstView['userTime.timer-screen']}ms`);
  return resolve(result);
});

The result object contains a lot of information about the page's performance, but for now we're interested in our custom metric. The object includes results for every individual run, but since we ran the test three times, we'll look at the average: result.data.average.firstView['userTime.timer-screen'] contains our custom metric, the value of our performance mark in milliseconds.

For a visual representation of the result, open the link at result.data.summary which also includes our custom metric.
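One pitfall: if the mark name is misspelled or a run fails, average.firstView['userTime.timer-screen'] is simply undefined, and a threshold comparison such as undefined > 2200 evaluates to false, so the check would pass silently. A small, hypothetical helper like the one below fails loudly instead. The result object here is a trimmed-down stand-in with a made-up value; a real result contains far more fields.

```javascript
// Hypothetical helper: reads a User Timing mark from a WebPageTest result.
// WebPageTest exposes each mark as `userTime.<mark name>`.
function getCustomMetric(result, markName, view = 'firstView') {
  const average = result && result.data && result.data.average;
  const value = average && average[view] && average[view][`userTime.${markName}`];
  if (typeof value !== 'number') {
    throw new Error(`No value for mark "${markName}" in ${view}`);
  }
  return value;
}

// Trimmed-down example of the result shape, with a made-up value
const exampleResult = {
  data: {
    average: {
      firstView: { 'userTime.timer-screen': 1234 },
    },
  },
};

console.log(`Time to timer screen: ${getCustomMetric(exampleResult, 'timer-screen')}ms`);
```
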

TravisCI

Now that we have a script that gives us access to our new metric, it's time to integrate it into our CI pipeline.

If you don't use Travis for your project, you can skip this part and jump to the conclusion, or keep reading and port the Travis-specific parts to the CI tool you use.

Travis allows you to specify jobs and the order in which they run. We will set up a job that tests the web application after deployment. However, if you have a staging environment, it would make sense to run these tests there too, to keep performance issues from reaching production in the first place.

Encrypt the API key

We don't want our API key to be in the source code, especially if the project is open source. Travis has an easy-to-use CLI tool that encrypts it for us:

travis encrypt WPT_API_KEY="your-api-key" --add env.global

This will automatically add the encrypted key to your .travis.yml. You can now access it in your script via process.env.WPT_API_KEY.
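Rather than hard-coding 'your-api-key' in webpagetest.js, the script can read the key from the environment. Below is a minimal sketch with a hypothetical getApiKey helper that stops early with a readable message when the variable is missing, for example in builds where Travis does not decrypt secure variables, such as pull requests from forks. The environment is passed in as a parameter so the sketch can be tried without setting real variables.

```javascript
// Hypothetical helper: read the WebPageTest key that Travis decrypts into
// the environment, and fail early with a clear message if it is missing
function getApiKey(env = process.env) {
  const key = env.WPT_API_KEY;
  if (!key) {
    throw new Error('WPT_API_KEY is not set. Did you run "travis encrypt"?');
  }
  return key;
}

// In webpagetest.js this would replace the hard-coded key:
// const wpt = new WebPageTest('https://www.webpagetest.org/', getApiKey());

// Example with an explicit environment object instead of process.env
console.log(getApiKey({ WPT_API_KEY: 'example-key' }));
```
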

Run the test as a Travis job

If you had no additional jobs in your Travis config file before, you might have to do a little reordering now.

Most of your configuration has to be moved into one job. Common settings, like the language, stay outside of the jobs.

I'll explain this with comments in my .travis.yml:

language: node_js

node_js:

- 12

# We cache the node_modules folder, so travis doesn't

# need to install all dependencies twice

cache:

yarn: true # Delete this this if you're using npm

directories:

- node_modules

env:

global:

- secure: [your-encrypted-API-key]

jobs:

include:

# That's the job that's deploying your application

# All your previous configuration goes here

- stage: deploy

[...] # your configuration

# That's the name that will show up in the travis interface

- stage: webpagetest production

# Change this with the name of your staging branch

if: branch = master

# That's where the script is being executed

# My script is located in the scripts folder

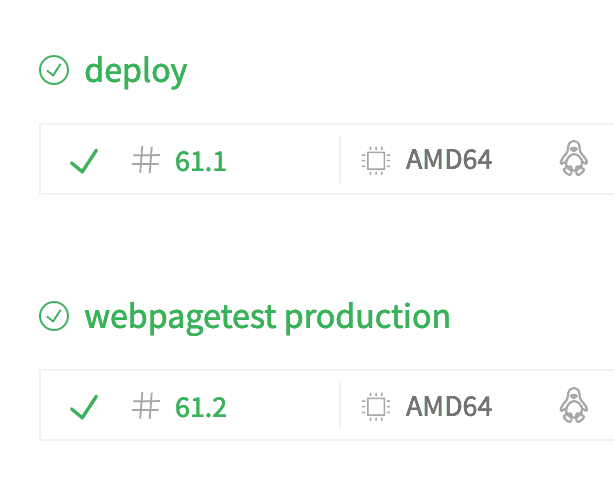

script: 'node ./scripts/webpagetest.js'With this setup, you will see that two jobs will show up for every build, one that is deploying the application and another one that runs the WebPageTest script.

Decide if or when the build fails

If you run this now, the new job will always pass, since we only run a WebPageTest test but never fail the build based on its result.

We can change this by setting a threshold for our custom metric and exiting the Node process that runs our script with an error:

// The custom metric from the test result
const ttfrTimer = result.data.average.firstView['userTime.timer-screen'];

// Too slow, let the job fail
if (ttfrTimer > 2200) {
  process.exit(1);
}

Here I set the threshold to 2200ms; you might want to adjust this value. Be aware that the measured time can vary a lot depending on factors like server load and network conditions, so it isn't always a good idea to fail the build straight away.
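One way to soften this is a two-level budget: warn between a soft and a hard limit, and only fail above the hard limit. The function name evaluateMetric and both limits below are hypothetical example values, not anything WebPageTest defines; the metric is hard-coded so the sketch runs on its own. It sets process.exitCode instead of calling process.exit(1), which lets pending output finish before Node exits with the failure code that makes the Travis job fail.

```javascript
// Hypothetical two-level budget: warn above `warnAt`, fail above `failAt`.
// Both limits are example values; tune them to your own measurements.
function evaluateMetric(valueMs, { warnAt = 2000, failAt = 2200 } = {}) {
  if (typeof valueMs !== 'number' || Number.isNaN(valueMs)) {
    return 'fail'; // a missing metric should not pass silently
  }
  if (valueMs > failAt) return 'fail';
  if (valueMs > warnAt) return 'warn';
  return 'pass';
}

const timeToTimerScreen = 2100; // example value, normally read from the test result
const verdict = evaluateMetric(timeToTimerScreen);
console.log(`Time to timer screen: ${timeToTimerScreen}ms (${verdict})`);

if (verdict === 'fail') {
  // Node exits with code 1 once pending work (logs, requests) is done
  process.exitCode = 1;
}
```
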

Note that since the test job runs after the deploy job, a failing WebPageTest job does not stop the deployment: your application is deployed regardless of the result of your custom metric.

Another option is to report the results to another service, such as Slack.
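A minimal sketch of such a report, assuming a Slack incoming webhook, which accepts a POST request with a JSON body containing a text field. The helper names and the SLACK_WEBHOOK_URL environment variable are hypothetical. The global fetch used here requires Node 18 or later, so with the node_js: 12 setting from the Travis config you would need to raise the version or use an HTTP client package instead.

```javascript
// Hypothetical reporter for a Slack incoming webhook.
// SLACK_WEBHOOK_URL is an assumed environment variable name.
function buildSlackMessage(metricName, valueMs, thresholdMs) {
  const status = valueMs > thresholdMs ? ':warning: over budget' : ':white_check_mark: within budget';
  return { text: `${metricName}: ${valueMs}ms (budget ${thresholdMs}ms) ${status}` };
}

async function postToSlack(webhookUrl, message) {
  // Global fetch is available from Node 18 onwards
  const response = await fetch(webhookUrl, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(message),
  });
  if (!response.ok) {
    throw new Error(`Slack responded with ${response.status}`);
  }
}

// Example values; in the script these would come from the test result
const message = buildSlackMessage('Time to timer screen', 2350, 2200);
console.log(message.text);

// Only post when a webhook URL is configured
if (process.env.SLACK_WEBHOOK_URL) {
  postToSlack(process.env.SLACK_WEBHOOK_URL, message).catch((err) => console.error(err.message));
}
```
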

Conclusion

Not every metric is equally important, so implement custom metrics like this one only where they reflect something that matters to your users or your business.

There are few limits to what you can do with the results WebPageTest gives you. WebPageTest is also used by SpeedCurve, which makes use of custom metrics as well.

This tutorial is by no means comprehensive and is intended to help you start experimenting with the implementation of custom metrics.

What kind of custom metric would you like to implement for your current projects? Do you think they make sense at all? I would be happy to read some of your ideas on Twitter.